Apple Vision Pro review: The infinite desktop

In 2000, Paradox Press published "Reinventing Comics: How Imagination and Technology Are Revolutionizing an Art Form." The book was Scott McCloud’s follow-up to his seminal 1993 work, "Understanding Comics: The Invisible Art." Where the earlier title explored the history and visual language of sequential art, the second volume finds the medium at a crossroads. The turn of the millennium presented new technologies that threatened to upend print media.

Along with exploring existing vocabulary, McCloud coined a few terms of his own. Far and away the most influential of the bunch was “infinite canvas,” which the author explains thusly:

There may never be a monitor as wide as Europe, yet a comic as wide as Europe or as tall as a mountain can be displayed on any monitor, simply by moving across its surface, inch-by-inch, foot-by-foot, mile-by-mile . . . In a digital environment there’s no reason a 500 panel story can’t be told vertically — or horizontally like a great graphic skyline.

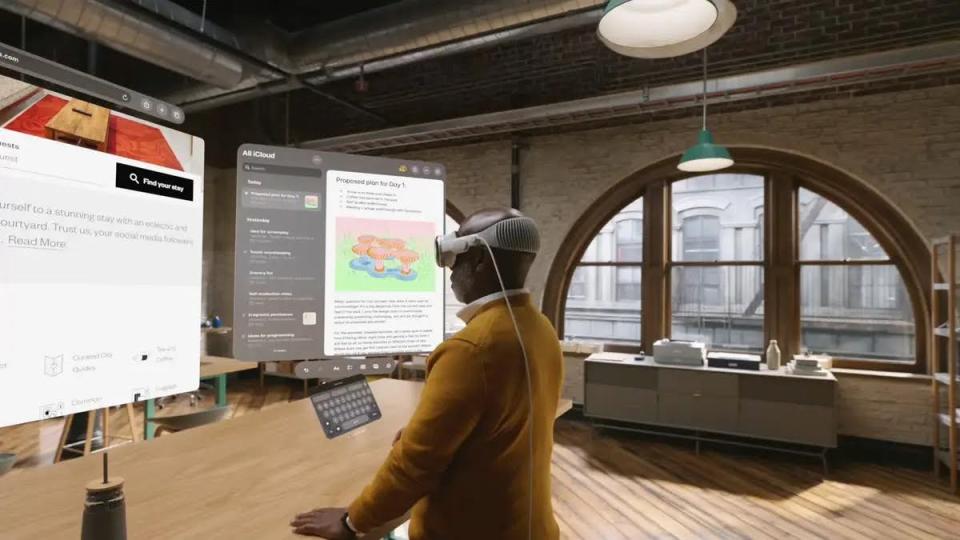

The infinite desktop

Image Credits: Apple

As the realities of corporate control settled into the internet, the notion of infinite canvas has somewhat fallen out of popular memory (inhabit a medium long enough and you'll eventually bump up against its boundaries). Over the past eight months, however, the term has been creeping back into popular use, courtesy of Apple. The company loves a catchy slogan, and they think you’re going to love it, too. McCloud’s term presciently touches upon some of the larger paradigm shifts the company is hoping to foster with its new $3,500 headset.

"Vision Pro creates an infinite canvas for apps that scales beyond the boundaries of a traditional display and introduces a fully three-dimensional user interface controlled by the most natural and intuitive inputs possible — a user’s eyes, hands, and voice," Apple wrote in the first Vision Pro press release.

But where McCloud’s conception of the infinite canvas specifically applied to breaking free of the paper page by embracing the monitor, Apple’s vision of “spatial computing” is an effort to go beyond that monitor. In some ways, the product succeeds brilliantly, but in much the same way that holding your hands up in one of Apple’s “Virtual Environments” displays artifacting, that vision is currently still rough around the edges.

Image Credits: Apple

One thing to remember when discussing the Vision Pro is that it’s a first-generation product. The notion of first-gen products carries a lot of baggage -- both good and bad. On the positive side is ambition and excitement -- the glimpses of the future that flash in front of your pupils in beautiful 4K. On the opposite end is the very real notion that this is the first volley in a much larger, longer war. First steps are often fraught with peril.

"A journey of a thousand miles begins with a single step," according to a common paraphrase of an old Chinese proverb. But maybe The Proclaimers put it best: “Da-da dum diddy dum diddy dum diddy da da da.”

Image Credits: Cory Green/Yahoo

Another thing that needs to be stressed in any conversation about Apple’s first foray into the world of spatial computing is that it’s bold. When I did a TV interview about the product yesterday, the host asked me whether I agreed with Apple’s sentiment that this was their most ambitious product to date. Surely, he suggested, it couldn’t be more ambitious than the iPhone a decade and a half ago.

I hesitated for a moment, but I now feel comfortable saying that the Vision Pro is -- in some key ways -- the more ambitious product. The iPhone was not the first truly successful smartphone. The precise origin point is somewhat nebulous, depending on shifting definitions, but devices from companies like BlackBerry and Nokia came first. I might also point out that the App Store was created for the iPhone 3G the following year. None of this diminishes the truly transformative impact the original handset had on both the industry and the world (you would be a fool to deny such things), but there were plenty of popular smartphones in the world by the time the iPhone arrived in 2007.

The story of extended reality, on the other hand, has been punctuated by decades of failed promise. That there hasn’t been a truly successful extended reality headset except the Meta Quest (which occupies a wholly different spot in the market) is not for lack of trying. Some of the industry’s biggest names, including Google and Samsung, have taken several swings at the space with less than satisfactory results.

Apple could have easily sauntered down the path blazed by Meta. A (relatively) low-cost product that traded on the company’s years of mobile gaming experience seems like an easy enough pitch. The Vision Pro is decidedly not that device. It was telling that gaming barely warranted mention in the product’s WWDC unveiling over the summer. That’s not to say that games can't and won’t play a central role here (Apple, frankly, would be stupid to turn its back on that little $350 billion industry). But they're very much not the tip of the spear.

The future of multitasking?

Image Credits: Cory Green/Yahoo

In the headset’s current iteration, games are not the primary driver. The company instead presented a future wherein the Vision Pro is, in essence, your next Mac. The infinite canvas of Apple’s design is more of an infinite desktop. There's a sense in which it serves as a reaction to the smartphone’s multitasking problem. It certainly feels as though we’ve reached the upper limits of smartphone screen size (admittedly not the first time in my career that I’ve suggested this). This is why, suddenly, foldables are everywhere.

The Vision Pro paints a picture of a world in which your computer’s desktop is limited only by the size of the room you’re in. Of all the consumer headsets I’ve tried, Vision Pro offers the most convincing sense of points in space. This may not sound like a big thing, but it’s foundational to the experience, even more so than those high-res displays. I’ve tried plenty of headsets over the years that promised a movie theater in a compact form factor. Sony, in particular, has been intrigued by the notion.

Those experiences feel exactly like what they are: a small display mounted in front of your eyes. From this perspective, I wholly understand early criticism that the product is effectively like having an iPhone strapped to your face. When people tell you that you have to experience the Vision Pro to appreciate what it can do, this is what they’re talking about.

Image Credits: Meaningful things

This is the core of spatial computing -- and by extension, the Vision Pro. It’s the "Minority Report" (really need to update my pop culture references) thing of picking up and moving computer files in a three-dimensional space in front of you. Here that means I can be sitting at my coffee table banging out an email on a Bluetooth keyboard, open Apple Music and stick it off to the side. If I really want to go into multitasking overdrive, I can wallpaper my room in windows.

This is one of the most interesting contrasts at the center of the experience. It’s neat, but it’s not, you know, sexy. It’s an extension of desktop computing that is potentially the future of multitasking. There are absolutely people for whom the phrase “the future of multitasking” presents the same frisson I get when I put on an Alice Coltrane record, but for most of us, multitasking is a necessary evil. If the Vision Pro is, indeed, the future of the Mac, then its ultimate destination is as a utility or tool.

It’s the device you use for document writing, email, Zoom and Slack. And then, at the end of the day, you watch "Monarch" on Apple TV or play a bit of the new Sonic game on Apple Arcade. What it decidedly is not at present, however, is the wholly immersive gaming experience of a "Ready Player One."

Mac to the future

Image Credits: Apple

No one can do ecosystems like Apple. It’s a big part of the reason why most people don't stop at a single product. Once Apple gets its hooks in you, you’re done. I remain convinced that the threat of green bubbles is one of the primary drivers of iPhone sales. The company has long prided itself on the way its devices work together. This is a big benefit of developing both hardware and software. That phenomenon has only accelerated since the company started building its own chips in-house.

Image Credits: Apple

For the record, the Vision Pro sports an M2 (along with the new R1 chip, which does the heavy lifting of processing the device’s various sensors). Courtesy of Apple’s annual release cadence, that's now last year’s chip. But for what the headset requires, it’s plenty capable. The Vision Pro is an interesting entry into the Apple ecosystem, in that it operates both as its own computing device and as an extension of the Mac. From that standpoint, it’s perhaps a logical extension of a feature like Sidecar, wherein an iPad can double as a secondary laptop display.

Image Credits: Apple

The Vision Pro unit Apple loaned to TechCrunch has 1TB of storage. That’s the top end of the storage options, which bumps the 256GB model’s $3,500 starting point up to $3,900 — but what’s $400 between friends? That’s coupled with 16GB of RAM. We’re definitely not talking Mac Studio specs here, but we are looking at a fully capable stand-alone computing device in its own right. Pair a Magic Keyboard and Trackpad, and you can probably get away with leaving your MacBook at the office for the day.

The Vision Pro also effectively doubles as a 4K Mac virtual display. I currently have a Mac Studio on my desk at home. Pairing the two is about as easy as connecting a pair of AirPods. Look up to see a green checkmark floating in space, select it with a pinch of your index finger and thumb and select the icon featuring a Vision Pro and Mac.

Once paired, the real-world monitor will turn off, replaced by the virtual version. You can move this around in space and pair it with additional windows powered by the headset. You can approximate a multi-monitor setup in this way. These don’t actually connect to one another in a manner similar to using your iPad as a desktop extension, nor is it currently easy to drag and drop between them. But it’s still a nice, effective use of the infinite desktop.

I also used the product with a MacBook Pro during my second demo with Apple. The effect is far more pronounced in this scenario, owing to the Mac's significantly smaller display. I can certainly see a point in the not so distant future where the Vision Pro’s status as a travel companion means being far less concerned about the spatial constraints of your MacBook Air. Take it on the plane for movies (assuming you’re cool being one of the first people attempting to break that social stigma), and get some work done on it once you check into the hotel.

Your body is a Magic Mouse

Image Credits: Cory Green/Yahoo

In June 1935, science-fiction writer Stanley Weinbaum published the short story "Pygmalion’s Spectacles." The work is considered by many to be one of the — if not the — first representations of virtual reality in science fiction. Weinbaum writes:

“Listen! I’m Albert Ludwig—Professor Ludwig.” As Dan was silent, he continued, “It means nothing to you, eh? But listen—a movie that gives one sight and sound. Suppose now I add taste, smell, even touch, if your interest is taken by the story. Suppose I make it so that you are in the story, you speak to the shadows, and the shadows reply, and instead of being on a screen, the story is all about you, and you are in it. Would that be to make real a dream?”

“How the devil could you do that?”

“How? How? But simply! First my liquid positive, then my magic spectacles. I photograph the story in a liquid with light-sensitive chromates. I build up a complex solution—do you see? I add taste chemically and sound electrically. And when the story is recorded, then I put the solution in my spectacle—my movie projector.”

Much of what Weinbaum wrote remains almost impossibly relevant nearly a century later. Since these earliest representations, however, the subject of input has remained a big, open-ended question. The variety of peripherals that have been developed to bring a more organic mode of locomotion over the decades boggles the mind, often to comedic effect.

Again, you can pair Vision Pro to a Bluetooth keyboard, mouse and game controller, but the vast majority of your interactions will occur through one of three modes: eyes, hands and voice. The first two work in tandem, and thankfully on the whole, the system does a good job with both. One important caveat here is that hand tracking requires an external light source. If it can’t see your hands, it can’t track them.

It's a different, and perhaps more organic, approach than the controllers on which Meta Quest has traditionally relied. What this means, however, is you’ll want at least a desk lamp on if you’re not relying on a Bluetooth trackpad. It’s easy to imagine running into some issues there. In the plane example, you may have to be the annoying passenger keeping the overhead light on during the red-eye.

Image Credits: Apple

In my own time with the device, the biggest issue came when I decided to cap the night off watching a film. Part of the registration process involves using an iPhone to get a 3D capture of your face. From there, Apple will stick in the light seal it thinks will best fit your face. Maybe I have a weird face. Maybe it’s my big nose (sound off in the comments). Whatever the case, I’ve struggled to get a fit that doesn’t let a fair bit of light in from the bottom.

This isn’t a huge issue for the most part during daytime activities (much of this stuff fades into the background as you more fully engage with the system). But in bed, as I’m attempting to watch some bad Hulu horror movie on the big screen under the stars at Yosemite, that light can create an annoying glare. This is a big potential opportunity for an enterprising accessory maker.

The primary mode of interaction with the interface goes eyes, hands. Look at an icon, button or other object to highlight it and pinch the index finger and thumb together on either of your hands. Point, click, repeat. This method works well on the whole. I’m not entirely convinced that there is a perfect method for interacting with extended reality, but to Apple’s credit, the company has developed something more than serviceable.

Pinch your fingers and swipe left or right to scroll. Pinch your fingers on both hands and pull them apart to zoom. There’s a bit of a learning curve, but it mostly works like a charm.

The efficacy of blinky-pinch goes out the window when typing. You know what a pain in the ass it is to use the Apple TV remote to type text? Well, consider what it would be like to replace that with looking and pinching. Gonna pat myself on the back here a bit and say I’ve actually become surprisingly efficient over the past few days, but it never doesn’t feel like a hassle.

This is where Siri shines. In spite of what I do for work, I’ve never been a big voice assistant person. I suspect much of that can be chalked up to living in a one-bedroom and not driving. But, man oh man, does Siri make a lot of sense here. This is the first time I can remember thinking to myself, "thank God for Siri." In the aforementioned movie scenario, I was scrolling through Apple TV+ and Hulu (by way of Disney+) and asking Siri for Rotten Tomatoes scores any time I came across something interesting.

In a more traditional home movie watching scenario, I would be doing something similar on my iPhone as I scroll through films on the iPad -- this is how we watch movies in 2024. But the voice assistant experience is genuinely a good one in this setting or when I have to write text into a search bar. It’s a strong example of Apple’s existing offerings coming together in a useful, meaningful way.

Here’s looking at . . . you?

Image Credits: Brian Heater

When you first receive the Vision Pro, the system will walk you through a brief onboarding process. It’s mostly painless, I promise. First, three circles of dots will appear, each with brighter light than the last. Here you’ll have to look at each while pinching your thumb and index fingers together. This helps calibrate eye tracking.

Next, the system asks you to hold up the front and then back of your hands to calibrate that tracking. You’ll be asked to pull the headset off to take a scan of your face. The process is almost identical to enrolling in Face ID on your iPhone. But first, a short introductory video.

The face-scan process utilizes the camera on the front of the visor to construct a shoulders-up 3D avatar. Look forward. Turn your head to the side. Then the other. Look up and rotate down. Look down and rotate up. Find some good lighting. Maybe a ring light if you have one. If you wear glasses, make a point not to squint. I apparently did, and now my Persona looks like it spent the last week celebrating Ohio’s Issue 2 ballot measure.

This is where your Persona is born. Put the headset back on, hold your breath, pray for the best and plan for the worst. This 3D avatar approximation of your shoulders up is designed for teleconferencing apps like FaceTime and Zoom. Keep in mind, you’re wearing a visor and there’s no external camera pointing at you, so Apple will go ahead and do its best to rebuild you, somewhere within the deepest depths of the uncanny valley.

I wrote about the enrollment experience in my “Day One” post, so excuse me while I quote myself for a minute:

The Personas that have thus far been made public have been a mixed bag. All of the influencers nailed theirs. Is it the lighting? Good genes? Maybe it’s Maybelline. I hope yours goes well, and don’t worry, you can try again if you didn’t stick the landing the first time. Mine? This is actually the better of the two I’ve set up so far. I still look like a talking thumb with a huffing addiction, and the moment really brings out the lingering Bell’s palsy in my right eye. Or maybe more of a fuzzy Max Headroom? I’ll try again tomorrow, and until then be mindful of the fact that the feature is effectively still in beta.

This is the version of you that will be speaking to people through FaceTime and other teleconferencing apps. This is meant to circumvent the fact that (1) you have a visor on your face and (2) there (probably) isn’t an external camera pointed at you. It takes some getting used to.

Oh, you’ll also have to take it again if you want to change your hair or shirt. I was hoping for something a bit more adaptable à la Memojis, but that’s not in the current feature set. It will, however, respond to different facial expressions like smiling, raising your eyebrows and even sticking out your tongue (handy for Zoom work calls). The scan is also used to generate an image of your eyes for the EyeSight feature on the front of the visor to alert others in the room when you are looking in their direction.

After enrolling my second Persona, I surprised my colleague Sarah P. with a FaceTime call. I think “surprised” is the right word the first time a co-worker’s avatar calls you to shoot the shit. This is one of those places it’s easy enough to give Apple the benefit of the doubt.

I've found myself taking a few Personas in a bid to create one that feels a bit truer to life. Honestly, though, I can't tell whether I'm getting better or worse at it.

The monster in the room

Image Credits: Cory Green/Yahoo

The headset reportedly underwent an eight-year gestation period. That’s a couple of lifetimes in the land of consumer hardware. A new Vanity Fair piece covers the first time Tim Cook caught sight of what would eventually become the Vision Pro:

This same building, where Cook finds the industrial design team working on this thing virtually no one else knows exists. Mike Rockwell, vice president of Apple’s Vision Products Group, is there when Cook enters and sees it. It’s like a “monster,” Cook tells me. “An apparatus.” Cook’s told to take a seat, and this massive, monstrous machine is placed around his face. It’s crude, like a giant box, and it’s got screens in it, half a dozen of them layered on top of each other, and cameras sticking out like whiskers. “You weren’t really wearing it at that time,” he tells me. “It wasn’t wearable by any means of the imagination.” And it’s whirring, with big fans—a steady, deep humming sound—on both sides of his face. And this apparatus has these wires coming out of it that sinuate all over the floor and stretch into another room, where they’re connected to a supercomputer, and then buttons are pressed and lights go on and the CPU and GPU start pulsating at billions of cycles per second and . . . Tim Cook is on the moon!

There was, of course, a lot of work put in between then and now. The Vision Pro currently sitting on the desk in front of me as I type this is not the monster Cook describes. The passage does, however, get at something important: it might not be the most elegant piece of hardware the company has ever designed, but Apple is going to get you to the moon one way or another.

The aforementioned reports of the Vision Pro’s prolonged development cycle also suggested that the product’s release was expedited by antsy shareholders. The story goes that the powers that be were frustrated by the company’s overabundance of caution, leading Apple to ship a product that wasn’t quite what they had hoped. Many point to the external battery as the most glaring example of this phenomenon.

Certainly there is something about it that feels “un-Apple,” as it sits in your pocket while you work. Of course, it's still Apple, so it's a nice-looking battery as these things go. It is also seemingly a product of the limits of physics. Surely Tim Cook’s ideal headset has a battery baked in à la the Meta Quest, and one day in the not-so-distant future, it almost certainly will. But attempts to do so now would have resulted in an altogether different compromise. Either the battery life would have been shorter or the headset would have been significantly heavier and hotter. Neither outcome is great.

Image Credits: Cory Green/Yahoo

Given that none of these options is particularly ideal, I would say Apple settled on the best one for this particular first-gen product. I’ve already seen reports of people experiencing headaches from the product’s current weight distribution (I highly recommend using the dual-loop band to spread the load more evenly). Sure, the battery hangs there like some vestigial tech tail, somewhat hampering mobility, but soon enough you’ll forget it’s there.

This itself can ultimately be an issue if you decide to stand, as I did halfway through my first demo. I got a slight jerk from the pack on doing so. Moral of the story: if you plan to do a lot of standing while wearing the headset, find a good spot for the battery. That means either using a pocket or investing in one of the new battery pack accessories currently cropping up. Fanny packs are overdue for a comeback.

The battery life will vary by an hour or so, depending on how you use the headset. Apple currently puts the stated time at around 2.5 hours. That means you can potentially start "Avatar: The Way of Water" with a full battery and still have 40 or so minutes left to go in the film. When using the device at home, however, this is largely mitigated by the USB-C port on the top of the pack. Plug in a cable and power adapter, and you don’t have to worry about running out of juice.

Passthrough

Image Credits: Apple

The first great consumer VR experience I can recall having involved an HTC Vive at, of all places, the Las Vegas Convention Center. It was the longest, hardest CES I ever covered. Just an absolute nightmare. I walked as much of the floor as humanly possible and filed 100 stories over the course of four days (I cannot attest to the quality of any).

As my time at the show was drawing to a close, I tried the Vive for the first time. Suddenly, I was underwater. A few moments later, a life-size blue whale swam by for a closer look. Momentarily coming eye to eye with likely the largest animal to ever exist on this planet was a humbling and genuinely transportive experience. It was a warm bath on a cold winter day that you know you have to leave at some point, but that doesn’t make it any easier.

In a piece I wrote about the Vision Pro earlier this month, I described the phenomenon of “Avatar Depression,” noting, “Not long after the film’s release, CNN reported on a strange new phenomenon that some deemed ‘Avatar Depression.’ The film had been so immersive, a handful of audience members reported experiencing a kind of emptiness when they left the theater, and Pandora along with it.”

For all its various first-gen shortcomings, the Vision Pro offers glimpses of truly transformative brilliance. As I noted earlier this week in my piece on the power of mindfulness apps:

There are moments while using it that feel like a porthole into a different world and — just maybe — the future. It’s imperfect, sure, but it’s an undeniable accomplishment after so many decades of extended reality false starts. Using the headset for even a few minutes is a mesmerizing experience but, perhaps more importantly, offers tangible glimpses into where things are going.

Thus far, I’ve encountered multiple experiences that have given my brain the warm bath. This is partly because the Vision Pro is neither fully an AR nor a VR experience. It’s MR (mixed reality). The company is able to walk the line courtesy of passthrough technology, which utilizes front-facing cameras to capture an image of the world around you in as close to real time as is currently possible (the latency is around 12 milliseconds at present).

Passthrough is necessary due to the opacity of the visor (the same reason why the company relies on the EyeSight feature to let people around you know when you’re looking at them). The opaque visor is, in turn, necessary for a fully immersive VR-like experience.

Image Credits: Apple

The Vision Pro’s Passthrough is easily the best I’ve seen. Even here, however, there is a good bit of work to be done. It’s generally darker than the room you're in, with its own uncanny, grainy quality. You’re not going to mistake it for an actual room anytime soon, but for a first pass, it’s impressive what Apple was able to accomplish here.

There’s still some distortion that takes some getting used to. There were times, for instance, when I instinctively went to look at a notification on my Apple Watch or read a text message on my iPhone. This could have something to do with the fact that I required prescription lens inserts, but whatever the case, I couldn’t make out text on either. I’m sure Apple would simply prefer that you do all of your reading and other content consumption through visionOS when you have it on.

Stanford just published a study discussing some of the current limitations of passthrough, using Meta Quest headsets. The school’s Virtual Human Interaction Lab notes, “We conclude that the passthrough experience inspires awe and lends itself to many applications, but also causes lapses in judgment of distance and time, induces simulator sickness, and interferes with social connection. We recommend caution and restraint for companies lobbying for daily use of these headsets, and suggest scholars should rigorously study this phenomenon.”

Simulator or VR sickness is a very real phenomenon. In principle, it operates similarly to carsickness or seasickness, presenting when there is a disconnect between what your eyes and inner ears perceive. It’s easy to see how distortion and even a 12-millisecond lag can contribute to this. It’s a real enough thing to warrant an official Apple support page.

The company writes:

A small number of people may develop symptoms after viewing content with fast motion or while moving during use of their Apple Vision Pro. Symptoms of motion sickness include:

I’m not particularly thrilled to tell you that I found myself among that small group on my second day of testing. I wasn’t entirely surprised, as I tend to be very prone to motion sickness. Per Apple’s advice, I likely won’t be using the device on a plane any time soon.

Apple adds, “Reducing head motion, reducing visual motion, and avoiding high motion experiences can help minimize motion sickness. Some apps may require you to turn your head and neck frequently, and can cause motion sickness even in the absence of noticeable visual motion.”

My advice is simple: I know it’s tempting to use this as much as possible after plunking down $3,500+ on an exciting new technology, but ease into it. Take your time, take breaks, hydrate, recognize your limits.

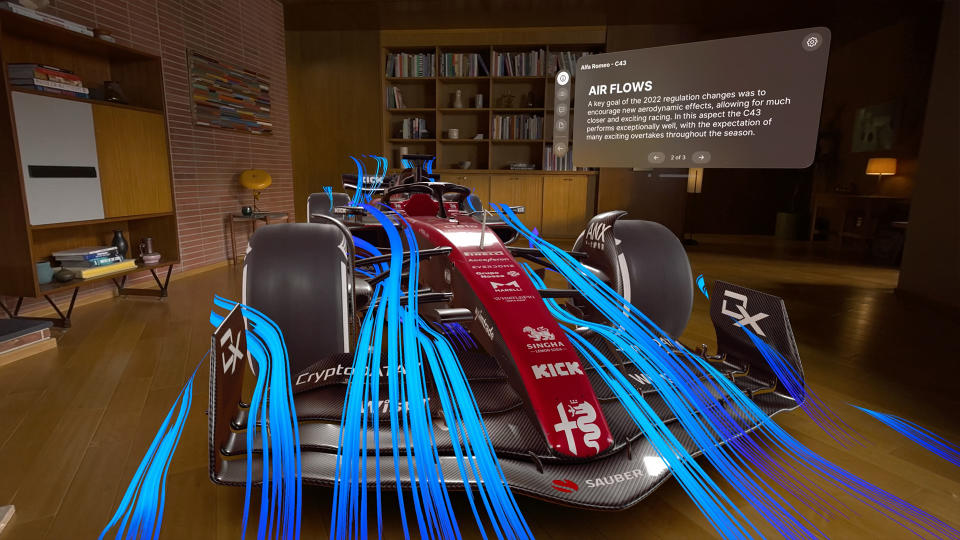

Environments and Apps

Image Credits: Apple

If visionOS is the infinite desktop, Environments are its wallpaper. They’re the most centralized place in which the immersion shines. Currently, Apple offers the moon (where Cook hangs out, per the Vanity Fair piece), Yosemite and the edge of a Hawaiian volcano, along with assorted scenes that are more color/vibes based (sort of like the extended reality version of Philips Hue scenes). If I were Apple, as the first person with a $3 trillion market cap, I would lean heavily into Environments as a way of silencing the doubters.

The 3D spatial landscapes, combined with the accompanying environment spatial audio, bring a genuine sense of serenity to Apple’s head-mounted computer. Using the large digital crown on the top of the visor, you can tune the environmental immersion up and down, bringing the sound of things like rain, wind, waves and crickets along with it. Apple also makes sure that people cut through the translucent veneer, so you’re fully aware when someone is in the room with you.

The experience is, however, not wholly seamless. Once again, things begin to fray around the edges when you look down at your hands to reveal that Zoom-like background artifacting.

If forced to choose three places I would like to visit through the power of extended reality, I would say: underwater, space and wherever it is the dinosaurs hang out. The latter is wonderfully illustrated by Encounter Dinosaurs, which builds on much of the work that went into Apple TV+’s terrific "Prehistoric Planet" to bring the ancient beasts into your living room.

While it's true that I’m a massive nerd, I have no shame in telling you that the demo app has brought me the purest sense of joy and wonder I’ve experienced with the Vision Pro thus far. Things begin with a curious butterfly that takes a meandering path toward you, before landing on your finger. It quickly flies off, back to where it came from. This process opens a large portal in the wall, revealing a craggy prehistoric landscape.

A small dinosaur emerges from beneath the rocks, drawn out by its own curiosity as the butterfly searches for its next landing spot. Soon, the serene scene evokes a sense of dread as a large T. rex-like carnivore enters the frame. Its interest in the smaller dinosaurs is quickly overshadowed by the human watching from the other side of the portal. Character AI makes it possible for the big dinosaur to lightly stalk you as it breaches the edges of the frame to get a closer look.

Apple tells me that it's seen a few Vision Pro users attempt to get a closer look themselves, only to come a bit too close to the actual wall for comfort. The headset does pop up an alert when you’re approaching an obstacle, but the coming days will no doubt be flooded with viral TikToks of users not heeding the warning. It’s the painful price of viral fame. O brave new world, that has such people in it.

One place I’ve barely scratched the surface on is content. In the lead-up to yesterday’s release, there were plenty of questions around whether Apple was beginning to lose developer support. Netflix, for one, signaled that it had no interest in making a visionOS app anytime soon. It’s important to remember that while the first iPhone was considered a revolutionary device upon its release, it was the following year’s App Store debut that truly changed the game forever.

The company’s relationship with developers has been somewhat strained in recent years, due to concerns over fees, but since the App Store hit the scene, Apple has enjoyed a strong relationship with the community. There are times when it feels a bit like a high-tech version of Tom Sawyer’s fence, as third parties develop the content that turned the iPhone from a platform into a truly transcendent piece of technology.

Some of the initial doubt was mitigated by the fact that most iPadOS apps work on visionOS (there’s that ecosystem again), and Apple further silenced doubters by announcing Thursday that the device would launch with more than 600 optimized apps and games. Sometimes the difference between an optimized and non-optimized app comes down to some subtle UX changes. But the Vision Pro will ultimately live or die by the content that utilizes its unique form factor.

Watching a movie or playing a game on a virtual big screen is nice, as is the infinite desktop, but the Vision Pro’s success will greatly hinge on whether or not developers leverage the technology for experiences that simply can’t be had on an iPhone or iPad. I will have a lot more to say about my first-hand app experience in the coming weeks. For now, along with the dinos, I will give special mention to Lego's AR experience and Apple's mindfulness app.

Otherwise, I spent a good bit of time playing around with iPadOS apps, prior to the headset's official launch. On the whole, they work well enough, though playing an Angry Birds or Fruit Ninja port only makes you long for a future release fully optimized for this form factor.

The next 10 years

Image Credits: Cory Green/Yahoo

When I look at my Persona, I sometimes have to squint to see myself reflected back at me. In some ways, this is an apt metaphor for the space the first-gen Vision Pro occupies. Once you really start engaging with the product, you begin to see the seeds of a truly revolutionary technology. Apple has, without question, pushed the state of the art.

More than anything, the Vision Pro is the first step in a much longer journey. The company will need to hit many important stops to get there, including scaling production to a point where the optics don’t make the system prohibitively expensive, making tweaks to Personas and passthrough and properly incentivizing the developers it encounters along the way.

Image Credits: Brian Heater

The Vision Pro is the best consumer extended reality headset. It’s also among the priciest. There are moments when you feel like you’re peering into the future and others when you understand those early reports that the company didn’t launch exactly the product it was hoping for. If you’ve got the money to spare, there’s an exciting time to be had — but hopefully one that will feel antiquated a generation or two down the road.

Image Credits: Apple

In the meantime, Apple would do best to focus much of its efforts on enterprise. Performing surgery in AR, seeing a 3D map of a wildfire in real time or walking through a rendering of a concept car are precisely the experiences that make the technology a hit in the workplace. And lord knows corporations are willing to shell out money for something that could save them funds in the long run.

It's probably telling that, as I wrap up these 6,000 words, I feel like I'm only beginning to scratch the surface. There are still many features and countless apps to discuss. For a product like this that is as much an investment as a purchase, it's important to give continued progress reports. My job here is far from over. The same can be said for Apple.